Affective Head: Realtime Transformations of 3D Portrait with Emotional Speech

SCI6338: Intro to Computational Design I Fall 2019

Advisor: Jose Luis García del Castillo y López

Teammates: Beilei Ren & Haoyu Zhao

Project Overview

Our faces distort when we feel extreme emotions, and many of us choose to hold those emotions back. We believe, however, that emotions are better expressed, so that the people around us can detect them and respond. “Affective Head” is a design experiment that transforms 3D-scanned human heads to disclose otherwise invisible emotions. With a real-time speech recognizer, participants can talk about their feelings and trigger a transformation by saying certain affective words. Each word in the list (happy, sad, angry, scared) has a corresponding transformation that combines a parametric geometric interference with a color change. Welcome to the world of explosive and trembling heads.

Intro: Facial Expressions of Emotions

Human faces distort when we feel emotions. Some of these distortions can be detected by others, while some cannot. In this design experiment, we exaggerate the facial distortions of four typical emotions: happy, sad, scared, and angry. In this initial phase, we imagine four corresponding transformations: swinging for happiness, downward melting for sadness, trembling for fear, and exploding for anger. Each can be realized through parametric interference on the 3D-scanned head mesh.

Detecting Emotional Words

The first part of the project is real-time speech recognition. With Google Audio, the sentences the participant says are translated into strings. The strings are then fed into a custom Grasshopper component that detects whether “happy”, “sad”, “scared”, or “angry” appears in the string and outputs the double 1, 2, 3, or 4 accordingly for the next steps.
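The keyword-to-code mapping described above can be sketched in plain Python. This is a minimal illustration, not the project's actual component: the function name `detect_emotion`, the whole-word matching, the "last emotion word wins" rule, and the `0.0` fallback for "no emotion word heard" are all assumptions made for the example.

```python
import re

# Codes follow the write-up: happy -> 1, sad -> 2, scared -> 3, angry -> 4.
EMOTION_CODES = {"happy": 1.0, "sad": 2.0, "scared": 3.0, "angry": 4.0}


def detect_emotion(transcript):
    """Return the code of the last emotion word in the transcript, or 0.0 if none."""
    # Lowercase and split into whole words so "unhappy" does not match "happy".
    words = re.findall(r"[a-z]+", transcript.lower())
    code = 0.0  # assumption: 0.0 means no emotion word was recognized
    for word in words:
        if word in EMOTION_CODES:
            code = EMOTION_CODES[word]  # assumption: the most recent word wins
    return code


print(detect_emotion("I feel so happy today"))        # 1.0
print(detect_emotion("I was sad, now I am angry!"))   # 4.0
```

Returning a double rather than a string keeps the output easy to wire into downstream numeric switches, which matches the 1 to 4 output described above.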

Data Flow

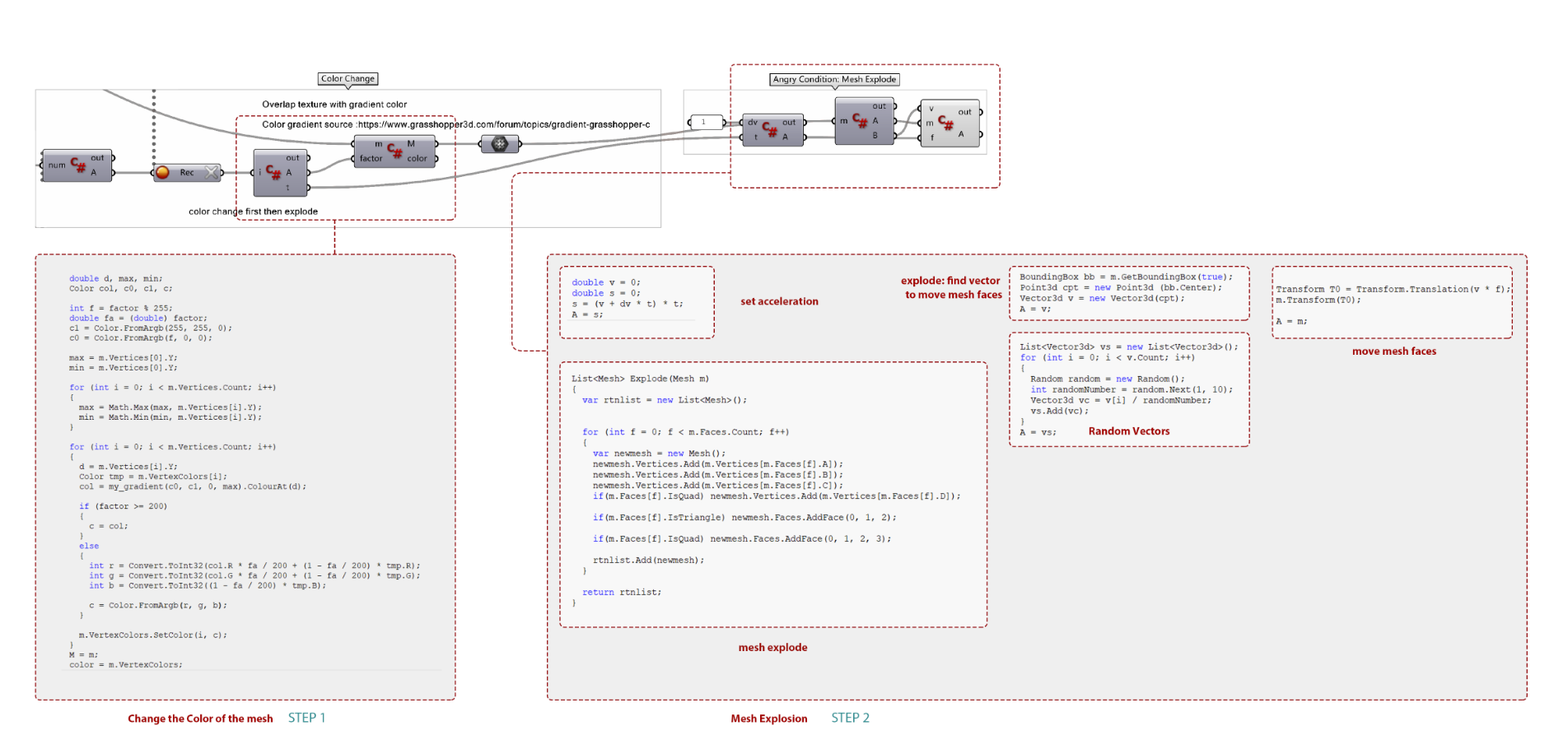

The Grasshopper definition and data flow chart show the goal of each step, as well as its inputs and outputs. We first import the speech and mesh inputs into Grasshopper, then create a parametric geometric interference and a color change for each emotion. All the transformations are driven by real-time speech input.


3D Scan Head

Using 3D scanning technology, we turn one team member's head into a digital model and refine it by adjusting the total mesh count, the vertices, the smoothness, and the texture.

“Happy” Transformation

Different speech inputs generate different corresponding transformations responsively in Grasshopper. When the word “happy” is recognized, the 3D-scanned mesh starts swinging.
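One way to picture a swinging motion is as a rotation whose angle oscillates over time. The sketch below is only an assumption about what "swinging" could mean here: it rotates the vertices about the vertical z-axis through the origin, and the names `swing`, `amplitude_deg`, and `period` are hypothetical. Plain `(x, y, z)` tuples stand in for the mesh vertices.

```python
import math


def swing(vertices, t, amplitude_deg=15.0, period=2.0):
    """Rotate (x, y, z) vertices about the z-axis by an angle that oscillates with time t."""
    # The angle sweeps between -amplitude and +amplitude once per period.
    angle = math.radians(amplitude_deg) * math.sin(2.0 * math.pi * t / period)
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in vertices]


# Sample the motion at a few moments; z is never changed by this rotation.
head = [(1.0, 0.0, 0.0), (0.0, 1.0, 2.0)]
for t in (0.0, 0.5, 1.0):
    print(t, swing(head, t))
```

Calling the function once per frame with an increasing `t` would produce a continuous back-and-forth motion.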

“Sad” Transformation

When the word “sad” is recognized, the face melts downward and its color gradually turns blue, conveying a very low mood.
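A downward melt with a gradual shift to blue could be driven by a single progress value between 0 and 1. The following is a hedged sketch under assumptions: higher vertices sag further than lower ones, colors are RGB triples, and the names `melt`, `blend_to_blue`, `progress`, and `max_drop` are hypothetical, not taken from the project.

```python
def _clamp01(value):
    """Limit a value to the range [0, 1]."""
    return min(max(value, 0.0), 1.0)


def melt(vertices, progress, max_drop=1.0):
    """Sag each (x, y, z) vertex downward; the higher the vertex, the further it drops."""
    if not vertices:
        return []
    progress = _clamp01(progress)
    z_min = min(z for _, _, z in vertices)
    z_max = max(z for _, _, z in vertices)
    height = (z_max - z_min) or 1.0  # avoid division by zero for flat input
    return [
        (x, y, z - progress * max_drop * (z - z_min) / height)
        for x, y, z in vertices
    ]


def blend_to_blue(color, progress, blue=(0, 0, 255)):
    """Linearly interpolate an RGB color toward blue as progress goes from 0 to 1."""
    progress = _clamp01(progress)
    return tuple(round(c + (b - c) * progress) for c, b in zip(color, blue))


print(melt([(0.0, 0.0, 0.0), (0.0, 0.0, 2.0)], progress=0.5))
print(blend_to_blue((200, 100, 0), progress=1.0))  # (0, 0, 255)
```

Sharing one progress value between the geometry and the color keeps the two effects in step as the melt advances.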

“Scared” Transformation

When the word “scared” is recognized, the face mesh is computed to tremble.
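Trembling can be approximated by giving every vertex a small random offset that is recomputed each frame. This is a minimal sketch under that assumption; the function name `tremble` and the `amplitude` parameter are hypothetical, and plain `(x, y, z)` tuples stand in for the mesh vertices.

```python
import random


def tremble(vertices, amplitude=0.05, rng=None):
    """Offset each (x, y, z) vertex by an independent random jitter within +/- amplitude."""
    rng = rng or random.Random()
    return [
        (
            x + rng.uniform(-amplitude, amplitude),
            y + rng.uniform(-amplitude, amplitude),
            z + rng.uniform(-amplitude, amplitude),
        )
        for x, y, z in vertices
    ]


# Always jitter the original vertices, not the previous frame's output,
# so the shape shakes around its rest position instead of drifting away.
rest_pose = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
frame = tremble(rest_pose, amplitude=0.05, rng=random.Random(42))
print(frame)
```

Passing a seeded `random.Random` makes a frame reproducible, which helps when tuning the amplitude.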

“Angry” Transformation

When the word “angry” is recognized, the face first turns red and then explodes into ash. Please join us to play!